The internet is a breeding ground for rumors and leaks, especially when it comes to highly anticipated movies. Recently, a trailer for the upcoming Avengers film "Avengers: Doomsday" sent ripples of excitement (and perhaps a little confusion) across the Marvel fandom. The visuals looked impressive and the stakes seemed high; it had all the hallmarks of a genuine sneak peek.

However, the excitement soon gave way to a revelation: this "trailer" was not official. It was created entirely with artificial intelligence. While the technology behind such realistic fakes is fascinating (and a little unsettling), the story took a darker turn when reports emerged that major Hollywood studios were allegedly profiting from these AI-generated trailers on YouTube.

Yes, you read that right. Despite publicly voicing concerns about the rise of AI in creative fields, often citing potential job losses for artists and the erosion of artistic integrity, these very studios were seemingly happy to collect ad revenue from AI-generated trailers on YouTube. The irony was thick enough to cut with a vibranium shield.

The outrage that followed was entirely justified. Fans felt misled, and the hypocrisy of studios condemning AI in one breath while quietly benefiting from it in another was glaring. It raised serious ethical questions:

- Transparency: Should studios be transparent about the origin of promotional material on their platforms?

- Misinformation: AI-generated trailers, especially for non-existent projects, can spread misinformation and build false expectations.

- Ethical Implications: Is it ethical for studios to profit from technology they publicly criticize, especially when that technology has the potential to impact the livelihoods of creatives?

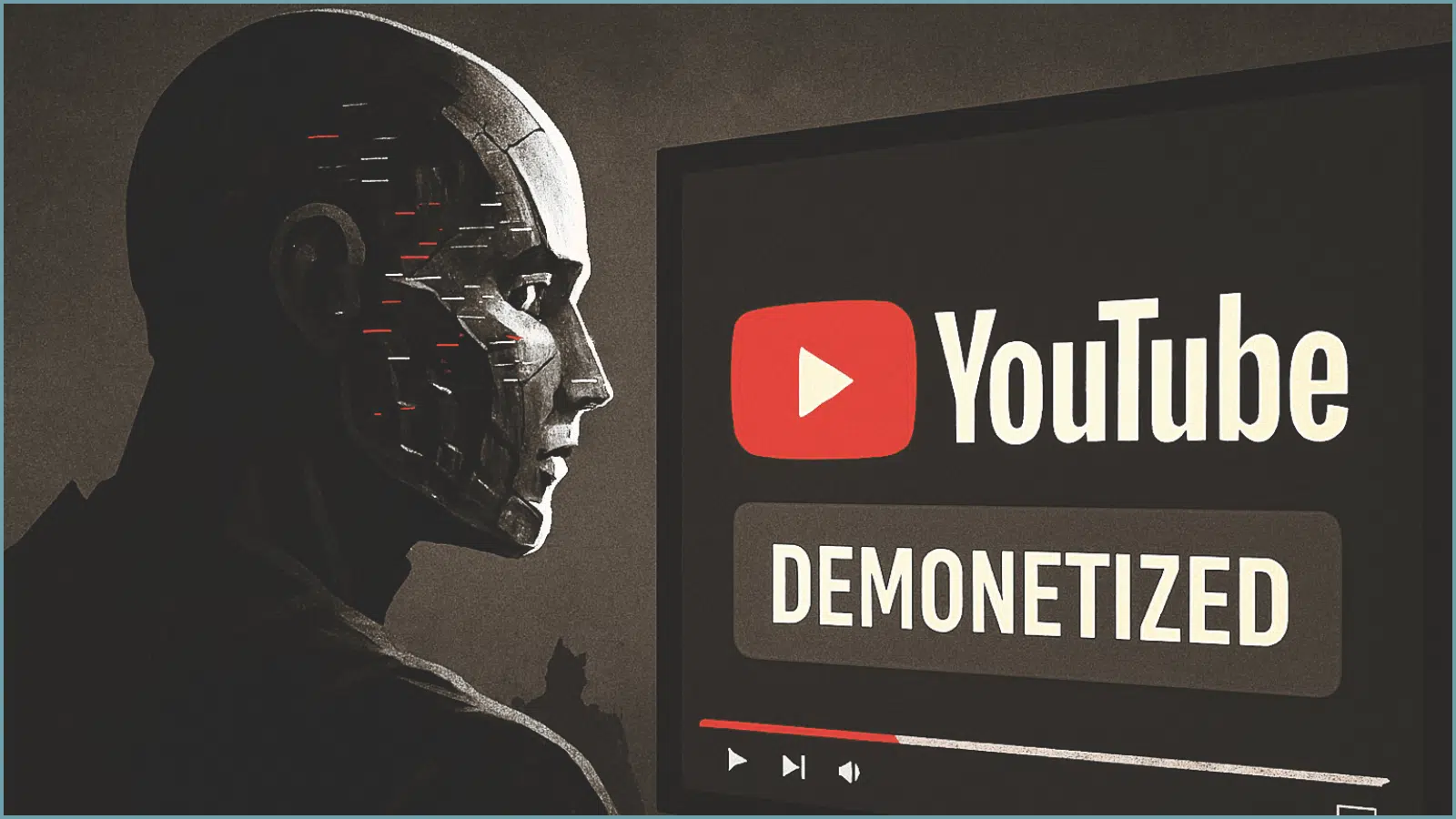

The backlash didn't go unnoticed. In a swift response, YouTube decided to demonetize AI-generated trailers. The move sent a clear message that the platform would not help anyone profit from misleading AI content, even established entities like major movie studios.

This whole saga serves as a stark reminder of the rapidly evolving landscape of content creation and consumption. AI is no longer a distant sci-fi concept; it’s actively shaping how we experience media. While AI tools can undoubtedly be powerful and beneficial, their use, especially in a commercial context by powerful corporations, demands careful consideration and ethical responsibility.

The "Avengers: Doomsday" AI trailer incident might be a one-off, but it opens up a broader conversation about the role of AI in entertainment marketing, the ethics of profiting from it, and the need for greater transparency from the industry. As audiences grow savvier about AI-generated content, studios will need to tread carefully to maintain trust and avoid accusations of duplicity.

What are your thoughts on this situation? Do you think studios have a responsibility to be more transparent about their use of AI in marketing? Share your opinions in the comments below!