Gemini is a brand-new type of AI that can do things we could only dream of before. It’s not like other chatbots or algorithms.

Google has indirectly challenged OpenAI's ChatGPT with Gemini, its multimodal AI model. Multimodal means Gemini can work with text, audio, and visual input.

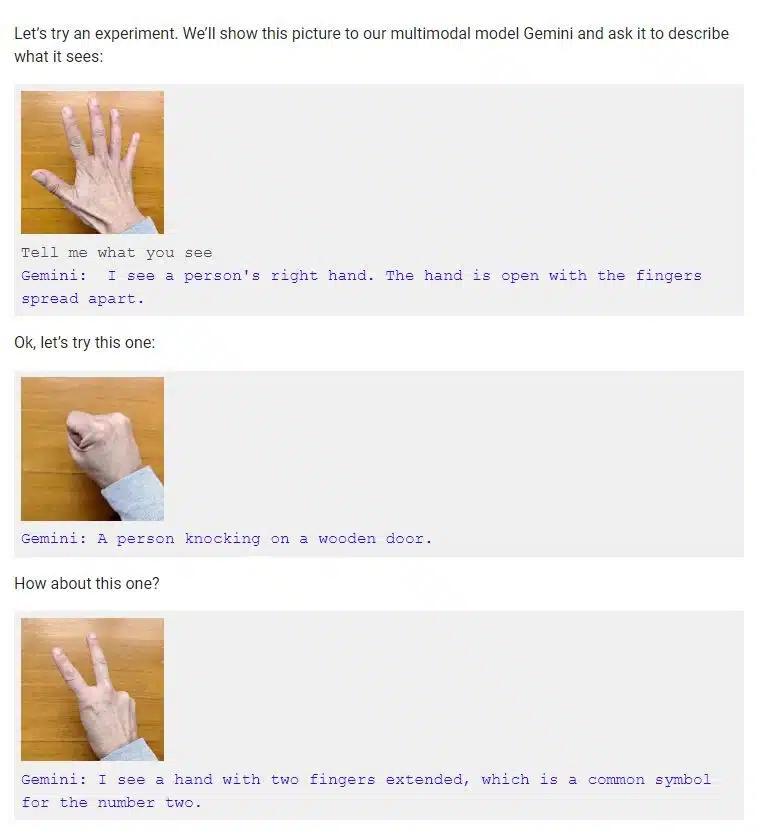

Proof That Google FAKED Gemini AI Results

Google published a video on its YouTube channel titled “Hands-on with Gemini: Interacting with multimodal AI.”

In the six-minute video, a user can be heard talking with the Gemini AI chatbot. Among other things, Gemini describes drawings of ducks out loud and tells the difference between a drawing of a duck and a rubber duck. Its apparent ability to distinguish between pictures and real objects looked truly remarkable.

Following the launch, Google confirmed to Bloomberg that the video was edited. The video description states: “For the purposes of this demo, latency has been reduced and Gemini outputs have been shortened for brevity.” In other words, each answer took longer than it appeared to in the video.

The demo wasn’t done in real time or by voice, either. When Bloomberg Opinion asked Google about the video, a spokesperson said it was made “using still image frames from the footage and prompting via text.” The spokesperson also pointed to a site where people could use photos of their hands, drawings, or other items to interact with Gemini. That is, the voice in the demo was reading out prompts that had been given to Gemini as text, alongside still images. That’s not at all what Google seemed to be suggesting: that Gemini could hold a smooth voice conversation with a person while it watched and reacted to the world around it in real time.

Google did not say which version of Gemini was used in the video, which leaves room for speculation. It is possible that the demo showcased Gemini Ultra, a more advanced version that has not been released yet, but nothing in the video confirms this. Google claims Gemini Ultra scored 90.0% on the MMLU (Massive Multitask Language Understanding) benchmark.

This kind of lying about details is part of Google’s larger marketing plan. The company wants us to remember that it has one of the biggest AI research teams in the world and more data than anyone else.